Killer robots. You have probably heard about them. You may also have heard that there is a campaign to stop them. One of the main arguments that proponents of the campaign make is that they will create responsibility gaps in military operations. The problem is twofold: (i) the robots themselves will not be proper subjects of responsibility ascriptions; and (ii) as they gain autonomy, there is more separation between what they do and the acts of the commanding officers or developers who allowed their use, and so less ground for holding these people responsible for what the robots do. A responsibility gap opens up.

The classic statement of this ‘responsibility gap’ argument comes from Robert Sparrow (2007, 74-75):

…the more autonomous these systems become, the less it will be possible to properly hold those who designed them or ordered their use responsible for their actions. Yet the impossibility of punishing the machine means that we cannot hold the machine responsible. We can insist that the officer who orders their use be held responsible for their actions, but only at the cost of allowing that they should sometimes be held entirely responsible for actions over which they had no control. For the foreseeable future then, the deployment of weapon systems controlled by artificial intelligences in warfare is therefore unfair either to potential casualties in the theatre of war, or to the officer who will be held responsible for their use.

This argument has been debated a lot since Sparrow first propounded it. What is often missing from those debates is some application of the legal doctrines of responsibility. Law has long dealt with analogous scenarios — e.g. people directing the actions of others to nefarious ends — and has developed a number of doctrines that plug the potential responsibility gaps that arise in these scenarios. What’s more, legal theorists and philosophers have long analysed the moral appropriateness of these doctrines, highlighting their weaknesses, and suggesting reforms that bring them into closer alignment with our intuitions of justice. Deeper engagement with these legal discussions could move the debate on killer robots and responsibility gaps forward.

Fortunately, some legal theorists have stepped up to the plate. Neha Jain is one example. In her recent paper ‘Autonomous weapons systems: new frameworks for individual responsibility’, she provides a thorough overview of the legal doctrines that could be used to plug the responsibility gap. There is a lot of insight to be gleaned from this paper, and I want to run through its main arguments in this post.

1. What is an autonomous weapons system anyway?

To get things started we need a sharper understanding of robot autonomy and the responsibility gap. We’ll begin with the latter. The typical scenario imagined by proponents of the gap is one in which some military officer or commander has authorised the battlefield use of an autonomous weapons system (or AWS), and that AWS has then used its lethal firepower to commit some act that, if it had been performed by a human combatant, would almost certainly be deemed criminal (or contrary to the laws of war).

There are two responsibility gaps that arise in this typical scenario. The first is the gap between the robot and the criminal/illegal outcome. This gap arises because the robot cannot be a fitting subject for attributions of responsibility.

I have looked at the arguments in favour of this view before. It may be possible, one day, to create a robot that meets all the criteria for moral personhood, but this is not going to happen for a long time, and there may be reason to think that we would never take claims of robot responsibility seriously. The second gap arises because there is some normative distance between what the AWS did and the authorisation of the officer or commander. The argument here would be that the AWS did something that was not foreseeable or foreseen by the officer/commander, or acted beyond their control or authorisation. Thus, they cannot fairly be held responsible for what the robot did.

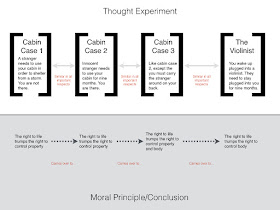

I have tried to illustrate this typical scenario, and the two responsibility gaps associated with it, in the diagram below. We will be focusing the gap between the officer/commander and the robot for the remainder of this post.

As you can see, the credibility of the responsibility gaps hinges on how autonomous the robots really are. This prompts the question: what do we mean when we ascribe ‘autonomy’ to a robot? There are two competing views. The first describes robot autonomy as being essentially analogous to human autonomy. This is called ‘strong autonomy’ in Jain’s paper:

Strong Robot Autonomy: A robotic system is strongly autonomous if it is ‘capable of acting for reasons that are internal to it and in light of its own experience’ (Jain 2016, 304).

If a robot has this type of autonomy it is, effectively, a moral agent, though perhaps not a responsible moral agent due to certain incapacities (more on this below). A responsibility gap then arises between a commander/officer and a strongly autonomous robot in much the same way that a responsibility gap arises between two human beings.

A second school of thought rejects this analogy-based approach to robot autonomy, arguing that when roboticists describe a system as ‘autonomous’ they are using the term in a distinct, non-analogous fashion. Jain refers to this as emergent autonomy:

Emergent Robot Autonomy: A robotic system is emergently autonomous if its behaviour is dependent on ‘sensor data (which can be unpredictable) and on stochastic (probability-based) reasoning that is used for learning and error correction’ (Jain 2016, 305).

This type of autonomy has more to do with the dynamic and adaptive capabilities of the robot than with its powers of moral reasoning and its capacity for ‘free’ will. The robot is autonomous if it can be deployed in a variety of environments and can respond to the contingent variables in those environments in an adaptive manner. Emergent autonomy creates a responsibility gap because the behaviour of the robot is unpredictable and unforeseeable.

Jain’s goal is to identify legal doctrines that can be used to plug the responsibility gap no matter what type of autonomy we ascribe to the robotic system.

2. Plugging the Gap in the Case of Strong Autonomy

Suppose a robotic system is strongly autonomous. Does this mean that the officer/commander who deployed the system cannot be held responsible for what it does? No. Legal systems have long dealt with this problem, and have developed two distinct doctrines for doing so. The first is the doctrine of innocent agency or perpetration; the second is the doctrine of command responsibility.

The doctrine of innocent agency or perpetration is likely to be less familiar. It describes a scenario in which one human being (the principal) uses another human being (or, as we will see, a human-run organisational apparatus) to commit a criminal act on their behalf. Consider the following example:

Poisoning-via-child: Grace has grown tired of her husband. She wants to poison him. But she doesn’t want to administer the lethal dose herself. She mixes the poison in with sugar and she asks her ten-year-old son to ‘put some sugar in daddy’s tea’. He dutifully does so.

In this example, Grace has used another human being to commit a criminal act on her behalf. Clearly that human being is innocent — he did not know what he was really doing — so it would be unfair or inappropriate to hold him responsible (contrast with a hypothetical case in which Grace hired a hitman to do her bidding). Common law systems allow for Grace to be held responsible for the crime through the doctrine of innocent agency. This applies whenever one human being uses another human being with some dispositional or circumstantial incapacity for responsibility to perform a criminal act on their behalf. The classic cases involve taking advantage of another person’s mental illness, ignorance or juvenility.

Similarly, but perhaps more interestingly, there is the civil law doctrine of perpetration. This doctrine covers cases in which one individual (the indirect perpetrator) gets another (the direct perpetrator) to commit a criminal act on their behalf. The indirect perpetrator uses the direct perpetrator as a tool, and hence the direct perpetrator must be at some sort of disadvantage or deficit relative to the indirect perpetrator. The German Criminal Code sets this out in Section 25, and the German doctrine has some interesting features:

Section 25 of the Strafgesetzbuch: The Hintermann is the indirect perpetrator. He or she uses a Vordermann as a direct perpetrator. The Vordermann possesses Handlungsherrschaft (act hegemony), but the Hintermann exercises Willensherrschaft (domination) over the will of the Vordermann.

Three main types of Willensherrschaft are recognised: (i) coercion; (ii) taking advantage of a mistake made by the Vordermann; or (iii) possessing control over some organisational apparatus (Organisationsherrschaft). The latter is particularly interesting because it allows us to imagine a case in which the indirect perpetrator uses some bureaucratic agency to carry out their will. It is also interesting because Article 25 of the Rome Statute establishing the International Criminal Court recognises the doctrine of perpetration, and the ICC has held in its decisions that it covers perpetration via an organisational apparatus.

Let’s now bring it back to the issue at hand. How do these doctrines apply to killer robots and the responsibility gap? The answer should be obvious enough. If robots possess the strong form of autonomy, but they have some deficit that prevents them from being responsible moral agents, then they are, in effect, like the innocent agents or direct perpetrators. Their human officers/commanders can be held responsible for what they do, through the doctrine of perpetration, provided those officers/commanders intended for them to do what they did, or knew that they would do what they did.

The problem with this, however, is that it doesn’t cover scenarios in which the robot acts outside or beyond the authorisation of the officer/commander. To plug the gap in those cases you would probably need the doctrine of command responsibility. This is a better-known doctrine, though it has been controversial. As Jain describes it, there are three basic features to command responsibility:

Command Responsibility: A doctrine allowing for ascriptions of responsibility in cases where (a) there is a superior-subordinate relationship where the superior has effective control over the subordinate; (b) the superior knew or had reason to know (or should have known) of the subordinates’ crimes and (c) the superior failed to control, prevent or punish the commission of the offences.

Command responsibility covers both military and civilian commanders, though it is usually applied more strictly in the case of military commanders. Civilian commanders must have known of the subordinates’ crimes, or consciously disregarded information that clearly indicated them (a recklessness-type standard); military commanders can be held responsible for failing to know when they should have known (a so-called ‘negligence standard’).

Command responsibility is well recognised in international law and is enshrined in Article 28 of the Rome Statute of the International Criminal Court. For it to apply, there must be a causal connection between what the superior did (or failed to do) and the actions of the subordinates. There must also be some temporal coincidence between the superior’s control and the subordinates’ actions.

Again, we can see easily enough how this could apply to the case of the strongly autonomous robot. The commander who deploys that robot could be held responsible for what it does if they have effective control over the robot, if they knew (or ought to have known) that it was doing something illegal, and if they failed to intervene and stop it from happening.

The problem with this, however, is that it assumes the robot acts in a rational and predictable manner — that its actions are ones that the commander could have known about and, perhaps, should have known about. If the robot is strongly autonomous, that might hold true; but if the robot is emergently autonomous, it might not.

3. Plugging the Gap in the Case of Emergent Autonomy

So we come to the case of emergent autonomy. Recall that the challenge here is that the robot behaves in a dynamic and adaptive manner. It responds to its environment in a complex and unpredictable way. The way in which it adapts and responds may be quite opaque to its human commanders (and even to its developers, if it relies on certain machine learning tools), and so they will be less willing and less able to second-guess its judgments.

This creates serious problems when it comes to plugging the responsibility gap. Although we could imagine using the doctrines of perpetration and/or command responsibility once again, we would quickly be forced to ask whether it was right and proper to do so. The critical questions will relate to the mental element required by both doctrines. I was a little sketchy about this in the previous section. I need to be clearer now.

In criminal law, responsibility depends on satisfying certain mens rea (mental element) conditions for an offence. In other words, in order to be held responsible you must have intended, known, or been reckless/negligent with respect to some fact or other. In the case of murder, for example, you must have intended to kill or cause grievous bodily harm to another person. In the case of manslaughter (a lesser offence) you must have been reckless (or in some cases grossly negligent) with respect to the chances that your action might cause another’s death.

If we want to apply doctrines like command responsibility to the case of an emergently autonomous robot, we will have to do so via something like the recklessness or negligence mens rea standards. The traditional application of the perpetration doctrine does not allow for this: the principal, or indirect perpetrator, must have intention or knowledge with respect to the elements of the offence committed by the direct perpetrator. The command responsibility doctrine does allow for the use of recklessness and negligence. In the case of civilian commanders, a recklessness mental element is required; in the case of military commanders, a negligence standard is allowed. So if we wanted to apply perpetration to emergently autonomous robots, we would have to lower its mens rea standard.

Even if we did that, it might be difficult to plug the gap. Consider recklessness first. There is no uniform agreement on what this mental element entails. The uncontroversial part of it is that in order to be reckless one must have recognised and disregarded a substantial risk that the criminal act would occur. The controversy arises over the standards by which we assess whether there was a consciously disregarded substantial risk. Must the person whose conduct led to the criminal act have recognised the risk as substantial? Or must he/she simply have recognised a risk, leaving it up to the rest of us to decide whether the risk was substantial or not? It makes a difference. Different people might have different views on what kinds of risks are substantial. Military commanders, for instance, might have very different standards from civilian commanders or members of the general public. What we perceive to be a substantial risk might be par for the course for them.

There is also disagreement as to whether the defendant must consciously recognise the specific type of harm that occurred or whether it is enough that they recognised a general category of harm into which the specific harm fits. So, in the case of a military operation gone awry, must the commander have recognised the general risk of collateral damage or the specific risk that a particular, identified group of people would be collateral damage? Again, it makes a big difference. If it is the more general category that must be recognised and disregarded, it will be easier to argue that commanders are reckless.

Similar considerations arise in the case of negligence. Negligence covers situations where a risk was not consciously recognised but ought to have been. It is all about standards of care and deviations therefrom. What would the reasonable person or, in the case of professionals, the reasonable professional have foreseen? Would the reasonable military commander have foreseen the risk of an AWS doing something untoward? What if it is completely unprecedented?

It seems obvious enough that the reasonable military commander must always foresee some risk when it comes to the use of AWSs. Military operations always carry some risk and AWSs are lethal weapons. But should that be enough for them to fall under the negligence standard? If we make it very easy for commanders to be held responsible, it could have a chilling effect on both the use and development of AWSs.

That might be welcomed by the Campaign to Stop Killer Robots, but not everyone will be so keen. Sceptics of the campaign will say that there are potential benefits to this technology (think about the arguments made in favour of self-driving cars) and that setting the mens rea standard too low will cut us off from these benefits.

Anyway, that’s it for this post.