A couple of years ago I wrote a series of posts about Nicholas Agar’s book Humanity’s End: Why we should reject radical enhancement. The book critiques the arguments of four pro-enhancement writers. One of the more interesting aspects of this critique was Agar’s treatment of mind-uploading. Many transhumanists are enamoured with the notion of mind-uploading, but Agar argued that mind-uploading would be irrational due to the non-zero risk that it would lead to your death. The argument for this was called Searle’s Wager, as it relied on ideas drawn from the work of John Searle.

This argument has been discussed online in the intervening years. But it has recently been drawn to my attention that Agar and Neil Levy debated the argument in the pages of the journal AI and Society back in 2011-12. Over the next few posts, I want to cover that debate. I start by looking at Neil Levy’s critique of Agar’s Searlian Wager argument.

The major thrust of this critique is that Searle’s Wager, like the Pascalian Wager upon which it is based, fails to present a serious case against the rationality of mind-uploading. This is not because mind-uploading would in fact be a rational thing to do — Levy remains agnostic about this issue — but because the principle of rational choice Agar uses to guide his argument fails to be properly action-guiding. In addition to this, Agar ignores considerations that affect the strength of his argument, and is inconsistent about certain other considerations.

Over this post and the next, I will look at Levy’s critique in some detail. I start out by returning to the wager argument and summarising its essential features. I then look at an important point Levy makes about the different timeframes involved in the wager. Before getting into all that, however, I want to briefly explain what I mean by the phrase “mind-uploading”, and highlight two different methods of uploading. Doing so will help to head off certain misconceptions about what Agar and Levy are saying.

Let’s start with the general concept of mind uploading. I understand this to be the process through which your mind (i.e. the mind in which your self/personality is instantiated) is “moved” from its current biological substrate (the brain) to some non-biological substrate (digital computer, artificial brain etc.). As I see it, there are two methods by which this could be achieved:

Copy-and-Transfer: Your mind/brain is copied and then transferred to some technological substrate, e.g. your brain is scanned and monitored over a period of time, and then a digital replica/emulation is created in a supercomputer. This may also involve the destruction of the original.

Gradual Replacement: The various parts of your mind/brain are gradually replaced by functionally equivalent, technological analogues, e.g. your frontal lobe and its biological neurons are replaced by a neuroprosthesis with silicon neurons.

Agar’s argument pertains to the first method of uploading, not the second. Indeed, as we shall see, part of the reason he thinks it would be irrational to “upload” is because we would have various neuroprosthetics available to us as well. More on this later.

1. Searle’s Wager

Since I have discussed Searle’s Wager at length before, what follows is a condensed summary, repeating some of the material in my earlier posts. That said, there are certain key differences between Levy’s interpretation of the argument and mine, and I’ll try to highlight those as I go along.

Wager arguments work from principles of rational choice, the most popular and relevant of which is the principle of expected utility maximisation. This holds that, since we typically act without full certainty of the outcomes of our actions, we should pick whichever option yields the highest probability-discounted payoff. For example, suppose I am choosing between two lottery scratch-cards in my local newsagents’ (something I would probably never do, but suppose I have to do it). Suppose they both cost the same amount of money. One of the cards gives me a one-in-ten chance of winning £100, and the other gives me a one-in-a-hundred chance of winning £10,000. Following the principle of maximising expected utility, I should pick the latter option: it yields an expected utility of £100, whereas the former only yields an expected utility of £10.
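To make the arithmetic concrete, here is a minimal sketch of the scratch-card comparison. It assumes that utility is simply the cash value of the prize, and that each card has a single prize; the function name `expected_value` is my own label for illustration.

```python
from fractions import Fraction


def expected_value(probability, prize):
    """Probability-discounted payoff of a single-prize gamble, in pounds."""
    return probability * prize


# Card A: one-in-ten chance of £100. Card B: one-in-a-hundred chance of £10,000.
card_a = expected_value(Fraction(1, 10), 100)
card_b = expected_value(Fraction(1, 100), 10_000)

# An expected-utility maximiser picks whichever card has the larger value.
best = "B" if card_b > card_a else "A"

print(f"Card A: £{card_a}")
print(f"Card B: £{card_b}")
print(f"Pick card {best}")
```

Exact fractions are used only to avoid floating-point rounding in such a small example.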

You might actually find that result a bit odd. You might think the one-in-ten chance of £100 is, in some sense, a “safer bet”. This is probably because: (a) you are risk averse in decision-making; and (b) you discount higher values of money once they go beyond a certain threshold (i.e. for you, there are diminishing marginal returns of increasing the value of a bet beyond a certain sum). Deviations from expected utility of this sort are not unusual and Agar uses them to his advantage when defending his argument. I’ll get to that point later. In the meantime, I’ll draft a version of the argument that uses the expected utility principle.

The Searlian Wager argument asks us to look at the risks associated with the choice of whether or not to undergo mind-uploading. It says that this choice can take place in one of two possible worlds: (i) a world in which John Searle’s Chinese Room argument is successful and so, at best, Weak AI is true; and (ii) a world in which John Searle’s Chinese Room argument fails and so Strong AI might be true. I’m not going to explain what the Chinese Room argument is, or talk about the differences between Weak and Strong AI; I assume everyone reading this post is familiar with these ideas already. The bottom line is that if Weak AI is true, a technological substrate will not be able to instantiate a truly human mind; but if Strong AI is true, this might indeed be possible.

Obviously, the rational mind-uploader will need Strong AI to be true, since otherwise mind-uploading will not be a way in which they can continue their existence in another medium. And, of course, they may have very good reasons for thinking it is true. Nevertheless, there is still a non-zero risk of Weak AI being true. And that is a problem. For if Weak AI is true, then uploading will lead to their self-destruction and no rational mind-uploader will want that. The question then is, given the uncertainty associated with the truth of Strong AI/Weak AI, what should the rational mind-uploader do? Agar argues that they should forgo mind-uploading because the expected utility of forgoing it is much higher than the expected utility of uploading. This gives us the Searlian Wager:

- (1) It is either the case that Strong AI is true (with probability p) or that Weak AI is true (with probability 1-p); and you can either choose to upload yourself to a computer (call this “U”) or not (call this “~U”).

- (2) If Strong AI is true, then either: (a) performing U results in us experiencing the benefits of continued existence with super enhanced abilities; or (b) performing ~U results in us experiencing the benefits of continued biological existence with whatever enhancements are available to us that do not require uploading.

- (3) If Weak AI is true, then either: (c) performing U results in us destroying ourselves; or (d) performing ~U results in us experiencing the benefits of continued biological existence with whatever enhancements are available to us that do not require uploading.

- (4) Therefore, the expected payoff of uploading ourselves is Eu(U) = (p)(a) + (1-p)(c); and the expected payoff of not uploading ourselves is Eu(~U) = (p)(b) + (1-p)(d).

- (5) We ought to do whatever yields the highest expected payoff.

- (6) Eu(~U) > Eu(U)

- (7) Therefore, we ought not to upload ourselves.

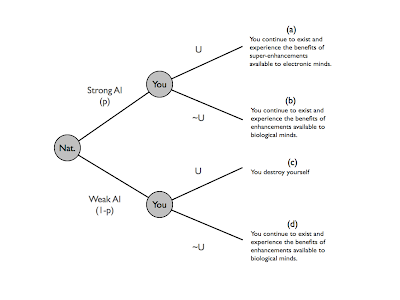

The logical structure of the decision underlying the argument can also be depicted in a decision tree diagram. In this diagram, the first decision node is taken up by N, which represents nature. Nature determines the probabilities that govern the rest of the decision. The second decision node represents the choices facing the rational mind-uploader. The four endpoints represent the possible outcomes for that decision-maker.
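The expected payoffs in premise (4) can be sketched in a few lines of code. Nothing in Agar’s or Levy’s papers assigns numbers to the four outcomes, so the payoff values below are purely hypothetical placeholders, chosen only to show how premise (6) depends on both p and the size of the payoffs.

```python
def expected_utilities(p, a, b, c, d):
    """Return (Eu(U), Eu(~U)) as defined in premise (4).

    p    -- probability that Strong AI is true
    a, c -- payoffs of uploading if Strong AI / Weak AI is true
    b, d -- payoffs of not uploading if Strong AI / Weak AI is true
    """
    eu_upload = p * a + (1 - p) * c
    eu_no_upload = p * b + (1 - p) * d
    return eu_upload, eu_no_upload


# Hypothetical payoffs (assumptions for illustration only): uploading is a
# little better than staying biological if Strong AI is true, and far worse
# (self-destruction) if Weak AI is true. Not uploading pays the same either way.
PAYOFFS = dict(a=100.0, b=80.0, c=-1000.0, d=80.0)

for p in (0.5, 0.9, 0.99):
    eu_u, eu_not_u = expected_utilities(p, **PAYOFFS)
    verdict = "do not upload" if eu_not_u > eu_u else "upload"
    print(f"p={p}: Eu(U)={eu_u:.1f}, Eu(~U)={eu_not_u:.1f} -> {verdict}")
```

With these made-up numbers, not uploading wins when p is 0.5 or 0.9, but uploading wins when p is 0.99. Different placeholder payoffs would move that tipping point, which is why so much hangs on the defence of premise (6).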

The key to the Searlian Wager argument is premise (6). What reasons does Agar give us for thinking that the expected utility of not uploading is higher than the expected utility of uploading? We got an initial sense of the argument from the preceding discussion (if you upload, you risk self-destruction), but as it turns out, Agar oscillates between two distinct timeframes when he tries to flesh out this argument. Levy takes him to task for it.

2. The Timeframe Problem

Currently, human beings have a problem: we all die. From our perspective, putative mind-uploading technologies might be worth a gamble. If we are going to die anyway, why not have our minds copied and transferred while we are at it? We have nothing much to lose and everything to gain. Right?

Right, but as Agar correctly observes, none of us currently faces Searle’s Wager. After all, the technology for putative mind-uploading does not exist. So the only people who will face it are future persons (possibly future versions of ourselves). Consequently, when analysing the risks and benefits associated with the choice, we have to look at it from their perspective. That, unfortunately, necessarily involves some speculation about the likely state of other sorts of technologies at that time.

And Agar does not shy away from such speculation. Indeed, I hinted at the sort of speculation in which he engages in my formulation of the argument in the previous section. Look back at the wording of premises (2) and (3). When describing the benefits of not-uploading, both premises talk about the “benefits of continued biological existence with whatever enhancements are available to us that do not require uploading.” This is an important point. Assuming we continue to invest in a stream of enhancement technologies, we can expect that, by the time putative mind-uploading technologies become available, a large swathe of other, biologically-based, radical enhancement technologies will be available. Among those could be technologies for radical life extension (maybe something along the lines of Aubrey de Grey’s longevity escape velocity will become a reality), which will allow us to continue our existence in our more traditional biological form for an indefinite period of time. Wouldn’t availing of those technologies be a safer and more attractive bet than undergoing mind-uploading? Agar seems to think so. (He also holds that it is more likely that we get these kinds of technologies before we get putative mind-uploading technologies.)

But how can Agar be sure that forgoing mind-uploading will look to be the safer bet? The benefits of mind-uploading might be enormous. Ray Kurzweil certainly seems to think so: he says it will allow us to realise a totally new, hyperenhanced form of existence, much greater than we could possibly realise in our traditional biological substrate. These benefits might completely outweigh the existential risks.

Agar responds to this by appealing to the psychological quirk I mentioned earlier on. It can be rational to favour a low-risk, smaller-benefit gamble over a high-risk, high-benefit gamble (if we modify the expected utility principle to take account of risk-aversion). For example (this is from Levy, not Agar), suppose you had to choose between: (a) a 100% chance of £1,000,000; and (b) a 1% chance of £150,000,000. I’m sure many people would opt for the first gamble over the second, even though the latter has a higher expected utility (£1,500,000 versus £1,000,000). Why? Because £1,000,000 will seem pretty good to most people; the extra value that could come from £150,000,000 wouldn’t seem to be worth the risk. Maybe the choice between uploading and continued biological existence is much like the choice between these two gambles?

This is where things break down for Levy. The attractiveness of those gambles will depend a lot on your starting point (your “anchoring point”). For most ordinary people, the guarantee of £1,000,000 will indeed be quite attractive: they don’t have a lot of money and that extra £1,000,000 will allow them to access a whole range of goods presently denied to them. But what if you are already a multi-millionaire? Given your current wealth and lifestyle an extra £1,000,000 might not be worth much, but an extra £150,000,000 might really make a difference. The starting point changes everything. And so this is the problem for Agar: from our current perspective, the benefits of biological enhancement (e.g. life extension) might seem good enough, but from the perspective of our radically enhanced future descendants, things might seem rather different. The extra benefits of the non-biological substrate might really be worth it.
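The point about starting positions can be illustrated with a toy model. The sketch below assumes a logarithmic utility-of-wealth curve, which is a standard textbook way of modelling diminishing marginal returns but is my assumption, not something Levy or Agar specifies. The two starting wealth figures are likewise hypothetical.

```python
import math


def prefers_sure_thing(starting_wealth,
                       sure_gain=1_000_000,
                       risky_gain=150_000_000,
                       risky_prob=0.01):
    """True if a log-utility agent prefers the certain £1,000,000.

    Assumption: utility is log(total wealth), so each extra pound matters
    less the richer you already are. starting_wealth must be positive.
    """
    u_sure = math.log(starting_wealth + sure_gain)
    u_risky = (risky_prob * math.log(starting_wealth + risky_gain)
               + (1 - risky_prob) * math.log(starting_wealth))
    return u_sure > u_risky


# Hypothetical starting points: modest savings versus a very large fortune.
for wealth in (20_000, 500_000_000):
    choice = "sure £1,000,000" if prefers_sure_thing(wealth) else "1% shot at £150,000,000"
    print(f"Starting wealth £{wealth:,}: prefers the {choice}")
```

Under this assumed curve, the person with modest savings takes the guaranteed sum while the very wealthy person takes the long shot. Exactly where the preference flips depends on the curve chosen, so the figures should not be read as anything more than an illustration of the anchoring effect.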

In essence, the problem is that in defending a suitably-revised form of premise (6), Agar oscillates between two timeframes. When focusing on the benefits of mind-uploading, he looks at it from our current perspective: the non-radically enhanced, death-engendering human beings that we are right now. But when looking at the risks of mind-uploading, he switches to the perspective of our future descendants: the already radically-enhanced, death-defeating human beings that we might become. He cannot have it both ways. He must hold the timeframes constant when assessing the relevant risks and rewards. If he does so, it may turn out that mind-uploading is more rational than it first appears.

Okay, so that’s the first of Levy’s several critiques. He adds to this the observation that, from our current perspective, the real choice is between investing in the development of mind-uploading technologies or not, and argues that there is no reason to think such investment is irrational, particularly if its gains might be so beneficial to us in the future. I’ll skip over that argument here though. In the next post, I’ll move on to the more substantive aspect of Levy’s critique. This has to do with the impact of non-zero probabilities on rational choice.
