“Ambition makes you look pretty ugly”

(Radiohead, Paranoid Android)

In Act 1, Scene VII of Macbeth, Shakespeare acknowledges the dark side of ambition. Having earlier received a prophecy from a trio of witches promising that he would ‘be king hereafter’, Macbeth, with some prompting from his wife, has resolved to kill the current king (Duncan) and take the throne for himself. But then he gets cold feet. In a poignant soliloquy he notes that he has no real reason to kill Duncan. Duncan has been a wise and generally good king. The only thing spurring Macbeth to do the deed is his own insatiable ambition:

I have no spur

To prick the sides of my intent but only

Vaulting ambition, which o'erleaps itself

And falls on the other.

(Macbeth, Act 1, Scene VII, lines 27-29)

Despite this, Macbeth ultimately succumbs to his ambition, kills Duncan, and rules Scotland with increasing despotism and cruelty. His downfall is a warning to us all. It suggests that ambition is often the root of moral collapse.

I have a confession to make. I am deeply suspicious of ambition. When I think of ambitious people, my mind is instantly drawn to Shakespearean examples like Macbeth and Richard III: to people who let their own drive for success cloud their moral judgment. But I appreciate that there is an irony to this. I am often accused (though ‘accusation’ might be too strong) of being ambitious. People perceive my frequent writing and publication, and other scholarly activities, as evidence of some deep-seated ambition. I often tell these people that I don’t think of myself as especially ambitious. In support of this, I point out that I have frequently turned down opportunities for raising my profile, including higher status jobs, and more money. Surely that’s the opposite of ambition?

Whatever about my own case, I find that ambition is viewed with ambivalence among my academic colleagues. When they speak of ambition they speak with forked tongues. They comment about the ambition of their peers with a mixture of suspicion and envy. They begrudgingly admire the activity and industriousness of the ambitious academic, but then suspect their motives. Perhaps the ambitious academic doesn’t really care about their research? Perhaps their research isn’t that good but this is masked beneath a veil of hyper-productivity? Maybe they are in it for the (admittedly limited) fame and glory? And yet, despite the ambivalence about ambition, they all seem to agree that idleness would be worse. The idle academic is seen as a pariah, living off the backs of others and taking up space that could be occupied by any one of the large number of ambitious, unemployed and freshly-minted PhDs.

All of which sets me thinking: am I right to be suspicious of ambition? Does ambition make us all look pretty ugly? Or is there some virtue in it? I’ll try to answer these questions in what follows.

1. What is ambition?

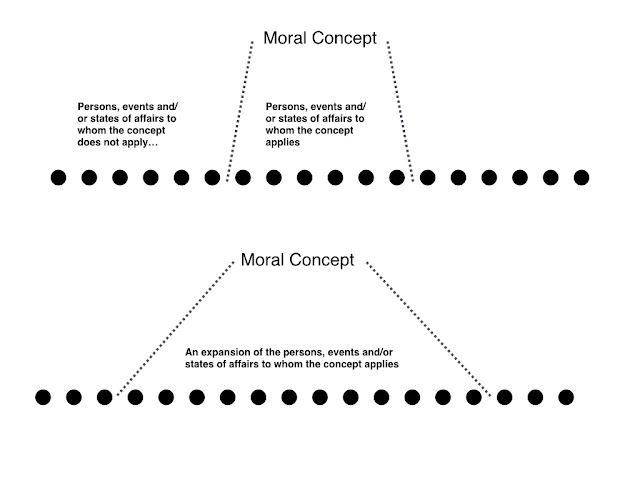

It would help if we had a clearer definition of what ambition is. As I see it, there are two ways to define ambition. The first is relatively neutral and sees ambition as a combination of desire and action; the second is more value-laden and sees ambition as a combination of specific desires and character traits. I’ll use the common philosophical terminology and refer to these two different senses of ambition as being ‘thin’ and ‘thick’. Here’s a more precise characterisation of both:

Thin Ambition: A strong desire to succeed in some particular endeavour(s) or enterprise(s), that is backed up by some committed action.

Thick Ambition: A strong desire for certain conventionally recognised forms of personal success (e.g. money, fame, power), that is backed up by a certain style of committed action (particularly ruthless and uncompromising action).

A couple of words about these definitions. Thin ambition has two elements to it: the desire to succeed, and the translation of that desire into some committed action plan. The second element is included in order to distinguish ambition from wishful thinking (Pettigrove 2006). The first element is, as noted, content neutral. It is a desire for success of some kind without any specification of what the object of that desire must be. In other words, following this definition, it would be possible to be ambitious about anything. I might, for example, be a really ambitious stamp collector. I might want to amass the world’s largest and most impressive collection of stamps. This could be the sole focus of my every waking hour. I would still deserve to be called ‘ambitious’, even though the object of my desire (stamp collecting) is not something we usually talk about in terms of ambition. Thin ambition is a pure, pared-down form of ambition.

Given this, you may think that ‘thin’ ambition constitutes the essence of ambition and that we don’t need the thicker, value-laden form of ambition. But I disagree. I think we need the thicker form because when people generally talk about ambition (‘X is really ambitious’) they seem to have the thicker form of ambition in mind. In that context, the word ‘ambition’ carries lots of connotations, many of them quite negative. This negativity stems from the fact that people associate the desire to succeed with particular kinds of objects (usually: the desire for money, fame and power) and with a particular kind of ruthlessness and single-mindedness in service of the desire. This is why my mind is instantly drawn to the examples of Macbeth and Richard III when I hear the word ‘ambition’. It’s probably also why I recoil from being called ‘ambitious’ and feel the need to argue that I am not.

This distinction between ‘thin’ and ‘thick’ ambition appears to give us an easy answer to the question of whether ambition is a good or bad thing. If you are talking about thick ambition, then it is more than likely a bad thing. If you are talking about thin ambition, then it is less clear-cut. It all really depends on what the ambition is about, i.e. on the object of the desire to succeed. If my ambition is directed purely at securing political power for myself (like Macbeth), then it might be a bad thing. In that case, the power itself is the sole motivation for my actions and I would be willing to do anything in service of that goal, up to and including murdering or crushing my rivals. But if my ambition is directed at being the most effective altruist in the world, then it might not be a bad thing. In that case, my ambition might coincide with a set of outcomes that is likely to make the world a better place. My ambition could be quite virtuous in that scenario.

But this is too quick. The thin and thick distinction doesn’t give us all we need to conduct a proper moral evaluation of ambition.

2. The Six Evaluators of Ambition

In his article, “Ambitions”, Glen Pettigrove argues that we cannot simply evaluate ambition by focusing on the objects of the desire to succeed. Instead, we have to focus on six different elements of ambition, each one of which plays a part in how we evaluate the ambitious project or individual. Pettigrove’s main point is that there is a good and a bad form of each of the elements, and this in turn affects whether the ambition itself is a good or bad thing.

The first element is the aforementioned “object” of the desire to succeed. What is the ambitious person trying to do? At the risk of repeating myself, some objects are good and some are bad. The desire to succeed at being a despotic dictator or serial killer is bad; the desire to succeed at curing heart disease or cancer is good. Some desires could also be value neutral and hence unobjectionable. If we could direct ambition toward positive objects, then we might welcome ambition. If ambition tends to get sucked up by negative objects, then we might not. In the latter respect, Pettigrove suggests that there is a tendency for ambition to be directed toward certain “bottomless” or “unending desires”. In other words, ambitious people have a tendency to want things that they can never get enough of, e.g. fame or money. This might have negative repercussions for the individual (and for society) if it means that they never feel satisfied and don’t know when to quit. That said, bottomless desires are not always a bad thing. The desire to do more and more good deeds, or acquire more and more knowledge, for example, doesn’t strike me as a bad thing and might provide the basis for a good, yet insatiable, form of ambition.

The second element is the individual’s knowledge of the object of the ambition. Do they know whether the object of their desire is good or bad? All else being equal, it is better if the person knows the moral quality of what it is they are doing (if it is good), and doesn’t know it (if it is bad). If the ambitious despot doesn’t know that what they want is bad for others then it might provide some grounds for excuse (though, of course, this depends on other factors). If the ambitious cancer doctor has no idea whether what they are doing is good or bad, then it might lower our estimation of what they are doing. Of course, most of us act under various conditions of uncertainty or probability, which complicates the evaluation. I think this is a real problem for academics. At least it is for me. For the vast majority of things that I do (teaching, research, writing etc), I either have no idea whether it is good or bad, or I am very unsure of this. I’m often throwing darts into the dark.

The third element is the individual’s motivation for doing the ambitious thing. Suppose that the object of the ambition is good (e.g. as in the case of the ambitious cancer doctor). What actually motivates the person to pursue that object? Most of us act for multiple reasons: because we value the goal/outcome of our actions, because we are bored, because we want money, because we are afraid to fail, because our friends and family told us to, because we want to be better than others, and so on. Pettigrove argues that it is generally better when (a) the motivation is intrinsic to the object, i.e. the object is pursued for its own sake and (b) the motivation is authentic to the individual, i.e. not something imposed upon them from the outside. The problem is that many ambitious people act for other reasons. Gore Vidal famously said that “it is not enough to succeed; others must fail”, and Morrissey echoed him by singing that “we hate it when our friends become successful”. I suspect both could serve as slogans for ambitious people. Oftentimes ambitious projects are pursued out of the fear of failure and the desire to be better than others. This is hardly laudable. That said, Pettigrove argues that we shouldn’t be too quick to judge on this score. Since people have multiple motivations, they could act for several at the same time, some good and some bad. Furthermore, some motivations that might seem bad at first glance (e.g. competitiveness) could be judged good following a deeper investigation (e.g. because some forms of competition are harmless and a spur to innovation).

The fourth element is the actual outcome of the ambition. How does it change the world? Obviously enough, if the outcome is very bad, then this might affect our evaluation of the ambition. This is true even if the intended object of the ambition was good. A cancer doctor who pushes for a new breakthrough treatment may have the best of intentions, but what if the treatment has very bad effects in the world? That might change how we think about their ambition. Maybe they were misguided by their ambition? Maybe it clouded their judgment and prevented them from appreciating all the negative effects their treatment was having? This is not an uncommon story. However, it also goes without saying that many times we are not able to fully judge the goodness or badness of an outcome: it might be good from some perspectives and bad from others. Furthermore, some outcomes might be effectively neutral.

The fifth element is a great film by Luc Besson…just kidding…the fifth element has to do with the actions that might be required by the ambition. What does the individual have to do to achieve their ambitions? If the means are bad, then this might affect our evaluation, even if the outcome and object are good. This gets us back to the problem of dirty hands/ruthlessness that was outlined earlier on. One of the major indictments of Macbeth is that he has to use ‘dirty hands’ tactics to achieve his ambition. The big question is whether ambition always requires some degree of ruthlessness and ‘dirty hands’ tactics. I think there is a real danger of this happening. The ambitious cancer doctor, for example, may become consumed by the goal of curing cancer and start to think that the ends justify the means. They might cut corners on ethical protocols, ignore outlying data, and rail against institutional norms and regulations. Perhaps sometimes this is justified, but many times it will simply be a case of unhinged ambition causing them to lose sight of what is right.

The sixth and final element has to do with the role that ambition plays in the individual’s life. How does the ambitious project structure and give shape to the individual’s life? Pettigrove thinks that ambition often plays a positive role in people’s lives. It provides them with a focus and purpose. It gives them a sense of meaning. This is all to the good. Pettigrove suggests that this is still true even if the other aspects of ambition are all bad. In other words, he suggests that even if ambition is on net bad (based on the other five elements), it will always at least play a positive role in someone’s life by giving it some structure and purpose. That said, I think there is an obvious flipside to this: the case of someone with too many ambitions. They become fragmented across multiple projects, some of which might even be incompatible with each other. Also, being too committed to an ambitious project might be bad if it means you can’t adapt and keep up with changes in both your own life and the world around you. I’ve talked a bit about this before in my posts on hypocrisy and life plans.

The takeaway message from Pettigrove’s analysis of ambition is: it’s complicated. There is no easy way to evaluate ambition. You have to consider all six elements and then come up with some relative weighting for the different elements. In many cases, ambition will be neither wholly good nor wholly bad. It will be a mix of good and bad.

3. Implications

So where does that leave me? How should I feel about ambition? On balance, I think it means that I should relax my suspicion of ambition. Ambition definitely has a dark side: it can be directed at the wrong things and become an all-consuming passion that causes us to lose sight of what is right and wrong. But it also, potentially, has a good side. This is a point that Pettigrove repeatedly makes in his article. He suggests that ambition is responsible for a lot of the good things that happen in human history, as well as the bad. It’s very difficult to come up with an objective balance sheet that determines which side of ambition wins out. The most we can do is try to harness ambition in the right direction (or else give up, but that might be worse).

I reflect on this in particular in relation to academia. As I was writing this post, I started to realise that my suspicion of ambition, and my critical reflections on it, are, perhaps, something of a luxury. I have a relatively privileged position in academia. I have a stable, permanent job at a decent university. I have spent years ‘proving’ myself to others through industrious scholarship. I can now afford some time to reflect on the merits of what I am doing. Many of my colleagues and peers are not so lucky. They have no permanent jobs. They stumble from temporary gig to temporary gig. They have to be ambitious to get noticed and to get employment. The system demands it from them. They cannot afford to be idle. As noted above, the idle academic is viewed as the ultimate pariah.

I don’t think we should be sanguine or fatalistic about this state of affairs. I think that the performance management culture in modern universities often encourages and rewards the worst kinds of ambition. In particular, I think that it often incentivises and rewards a destructive and non-virtuous competitiveness among academics. Still, given the demand for ambition and the luxury of idleness, I think it might be possible to resist the negative forms of ambition and focus on the good kinds of ambition. After all, success is very difficult to measure in academia. There are many metrics out there, and most people don’t really know how they should be weighted or evaluated. As a result, it might be possible to channel ambition in positive directions and avoid the worst excesses.

I live in hope.