Ralph McQuarrie's original concept art for C3PO

I always had a soft spot for C3PO. I know most people hated him. He was overly obsequious, terribly nervous, and often annoying. R2D2 was more roguish, resilient and robust. Nevertheless, I think C3PO had his charms. You couldn’t help but sympathise with his plight, dragged along by his more courageous peer into all sorts of adventures, most of which lay well beyond the competence of a simple protocol droid like him.

It seems I wasn’t the only one who sympathised with C3PO’s plight. Anthony Daniels, the actor who has spent much of his onscreen career stuffed inside the suit, was drawn to the part after seeing Ralph McQuarrie’s original drawings of the robot. He said the drawings conveyed a tremendous sense of pathos. So much so that he felt he had to play the character.

All of this came flooding back to me as I read David Gunkel’s recent article ‘The Other Question: Can and Should Robots Have Rights?’. Gunkel is well-known for his philosophical musings on technology, cyborgs and robots. He authored the ground-breaking book The Machine Question back in 2011, and has recently been dipping his toe into the topic of robot rights. At first glance, the topic seems like an odd one. Robots are simply machines (aren’t they?). Surely, they could not be the bearers of moral rights?

Au contraire. It seems that some people take the plight of the robots very seriously indeed. In his paper, Gunkel reviews four leading positions on the topic of robot rights before turning his attention to a fifth position — one that he thinks we should favour.

In what follows, I’m going to set out the four positions that he reviews, along with his criticisms thereof. I’ll then close by outlining some of my own criticisms/concerns about his proposed fifth position.

1. The Four Positions on Robot Rights

Before I get into the four perspectives that Gunkel reviews, I’m going to start by asking a question that he does not raise (in this paper), namely: what would it mean to say that a robot has a ‘right’ to something? This is an inquiry into the nature of rights in the first place. I think it is important to start with this question because it is worth having some sense of the practical meaning of robot rights before we consider their entitlement to them.

I’m not going to say anything particularly ground-breaking. I’m going to follow the standard Hohfeldian account of rights — one that has been used for over 100 years. According to this account, rights claims — e.g. the claim that you have a right to privacy — can be broken down into a set of four possible ‘incidents’: (i) a privilege; (ii) a claim; (iii) a power; and (iv) an immunity. So, in the case of a right to privacy, you could be claiming one or more of the following four things:

Privilege: That you have a liberty or privilege to do as you please within a certain zone of privacy.

Claim: That others have a duty not to encroach upon you in that zone of privacy.

Power: That you have the power to waive your claim-right not to be interfered with in that zone of privacy.

Immunity: That you are legally protected against others trying to waive your claim-right on your behalf.

As you can see, these four incidents are logically related to one another. Saying that you have a privilege to do X typically entails that you have a claim-right against others to stop them from interfering with that privilege. That said, you don’t need all four incidents in every case.

We don’t need to get too bogged down in these details. The important point here is that when we ask the question ‘Can and should robots have rights?’ we are asking whether they should have privileges, claims, powers and immunities to certain things. For example, you might say that there is a robot right to bodily integrity, which could mean that a robot would be free to do with its body (physical form) as it pleases and that others have a duty not to interfere with or manipulate that bodily form, unless they receive the robot’s acquiescence. Or, if you think that’s silly because robots can’t consent (or can they?) you might limit it to a simple claim-right, i.e. a duty not to interfere without permission from someone given the authority to make those decisions. Legal systems grant rights to people that are incapable of communicating their wishes, or to entities that are non-human, all the time so the notion that robots could be given rights in this way is not absurd.

But that, of course, brings us to the question that Gunkel asks, which is in fact two questions:

Q1 - Can robots have rights?

Q2 - Should robots have rights?

The first question is about the capacities of robots: do they, or could they, have the kinds of capacities that would ordinarily entitle an entity to rights? Gunkel views this as a factual/ontological question (an ‘is’ question). The second question is about whether robots should have the status of rights holders. Gunkel views this as an axiological question (an ‘ought’ question).

I’m not sure what to make of this framing. I’m a fairly staunch moralist when it comes to rights. I think we need to sort out our normative justification for the granting of rights before we can determine whether robots can have rights. Our normative justification of rights would have to identify the kinds of properties/capacities an entity needs to possess in order to have rights. It would then be a relatively simple question of determining whether robots can have those properties/capacities. The normative justification does most of the hard work and is really analytically prior to any inquiry into the rights of robots.

This means that I think there are more-or-less interesting ways of asking the two questions to which Gunkel alludes. The interesting form of the ‘can’ question is really: is it possible to create robots that would satisfy the normative conditions for an entitlement to rights (or have we even already created such robots)? The interesting form of the ‘should’ question is really: if it is possible to create such robots, should we do so?

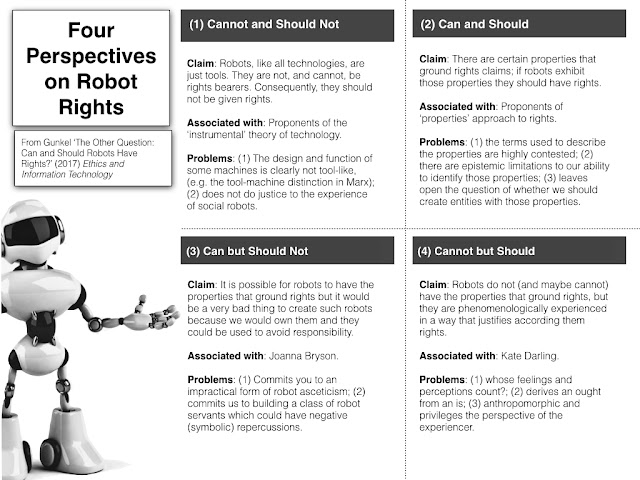

But that’s just my take on it. I still accept that there is an important distinction to be drawn between the ‘can’ and ‘should’ questions and that depending on your answer to them there are four logically possible perspectives on the issue of robot rights: (a) robots cannot and therefore should not have rights; (b) robots can and should have rights; (c) robots can but should not have rights; and (d) robots cannot but should have rights. These four perspectives are illustrated in the following two-by-two matrix below.

Surprisingly enough, each of these four perspectives has its defenders, and one of the goals of Gunkel’s article is to review each of them and subject it to critique. Let’s look at that next.

2. An Evaluation of the Four Perspectives

Let’s start with the claim that robots cannot and therefore should not have rights. Gunkel argues that this tends to be supported by those who view technology as a tool, i.e. as an instrument of the human will. This is a very common view, and is in many ways the ‘classic’ theoretical understanding of technology in human life. If technology is always a tool, and robots are just another form of technology, then they too are tools. And since tools cannot (and should not) be rights-bearers, it follows (doesn’t it?) that robots cannot and should not have rights.

Gunkel goes into the history of this position in quite some detail, but we don’t need to follow suit. What matters for us are his criticisms of it. He has two. The first is simply that the tool/instrumentalist view seems inadequate when it comes to explaining the functionality of some technologies. Even as far back as Hegel and Marx, distinctions were drawn between ‘machines’ and ‘tools’. The former could completely automate and replace a human worker, whereas the latter would just complement and assist one. Robots are clearly something more than mere tools: the latest ones are minimally autonomous and capable of learning from their mistakes. Calling them ‘tools’ would seem inappropriate. The other criticism is that the instrumentalist view seems particularly inadequate when it comes to describing advances in social robotics. People form close and deep attachments to social robots, even when the robots are not designed to look or act in ways that arouse such an emotional response. Consider, for example, the emotional attachments soldiers form with bomb disposal robots. There is nothing cute or animal-like about these robots. Nevertheless, they are not experienced as mere tools.

This brings us to the second perspective: that robots can and so should have rights. This is probably the view that is most similar to my own. Gunkel describes this as the ‘properties’ approach because proponents of it follow the path I outlined in the previous section: they first determine the properties that they think an entity must possess in order to count as a rights-bearer; and they then figure out whether robots exhibit those properties. Candidate properties include things like autonomy, self-awareness, sentience etc. Proponents of this view will say that if we can agree that robots exhibit those properties then, of course, they should have rights. But most say that robots don’t exhibit those properties just yet.

Gunkel sees three problems with this. First, the terms used to describe the properties are often highly contested. There is no single standard or agreement about the meaning of ‘consciousness’ or ‘autonomy’ and it is hard to see them being decidable in the future. Second, there are epistemic limitations to our ability to determine whether an entity possesses these properties. Consciousness is the famous example: we can never know for sure whether another person is conscious. Third, even if you accept this approach to the question there is still an important ethical issue concerning the creation of robots that exhibit the relevant properties: should we create such entities?

(For what it’s worth: I don’t see any of these as being significant criticisms of the ‘properties’ view. Why not? Because if they are problems for the ascription of rights to robots they are also problems for the ascription of rights to human beings. In other words: they raise no special problems when it comes to robots. After all, it is already the case that we don’t know for sure whether other humans exhibit the relevant properties, and there is a very active debate about the ethics of creating humans that exhibit these properties. If it is the properties that matter, then the specific entity that exhibits them does not.)

The third perspective says that robots can but should not have rights. This is effectively the view espoused by Joanna Bryson. Although she is somewhat sceptical of the possibility of robots exhibiting the properties needed to be rights-bearers, she is willing to concede the possibility. Nevertheless, she thinks it would be a very bad idea to create robots that exhibit these properties. In her most famous article on the topic, she argues that robots should always be ‘slaves’. She has since dropped this term in favour of ‘servants’. Bryson’s reasons for thinking that we should avoid creating robots that have rights are multifold. She sometimes makes much of the fact that we will necessarily be the ‘owners’ of robots (that they will be our property), but this seems like a weak grounding for the view that robots should not have rights given that property rights are not (contra Locke et al) features of the natural order. Better is the claim that the creation of such robots will lead to problems when it comes to responsibility and liability for robot misdeeds, and that they could be used to deceive, manipulate or mislead human beings, though neither of these is entirely persuasive to me.

Gunkel has two main criticisms of Bryson’s view. The first — which I like — is that Bryson is committed to a form of robot asceticism. Bryson thinks that we should not create robots that exhibit the properties that make them legitimate objects of moral concern. This means no social robots with person-like (or perhaps even animal-like) qualities. It could be extremely difficult to realise this asceticism in practice. As noted earlier, humans seem to form close, empathetic relationships with robots that are not intended to pull upon their emotional heartstrings. Consider, once more, the example of soldiers forming close attachments to bomb disposal robots. The other criticism that Gunkel has — which I’m slightly less convinced of — is that Bryson’s position commits her to building a class of robot servants. He worries about the social effects of this institutionalised subjugation. I find this less persuasive because I think the psychological and social effects on humans will depend largely on the form that robots take. If we create a class of robot servants that look and act like C3PO, we might have something to worry about. But robots do not need to exist in an integrated, humanoid (or organism-like) form.

The fourth perspective says that robots cannot but should have rights. This is the view of Kate Darling. I haven’t read her work so I’m relying on Gunkel’s presentation of it. Darling’s claim seems to be that robots do not currently have the properties that we require of rights-bearers, but that they are experienced by human beings in a unique and special way. They are not mere objects to us. We tend to anthropomorphise them, and project certain cognitive capabilities and emotions onto them. This in turn foments certain emotions in our interactions with them. Darling claims that this phenomenological experience might necessitate our having certain ethical obligations to robots. I tend to agree with this, though perhaps because I am not sure how different it really is from the ‘properties’ view (outlined above): whether an entity has the properties of a rights-bearer depends, to a large extent (with some qualifications) on our experience of it. At least, that’s my approach to the topic.

Gunkel thinks that there are three problems with Darling’s view. The first is that if we follow Kantian approaches to ethics, feelings are a poor guide to ethical duties. What’s more, if perception is what matters this raises the question: whose perception counts? What if not everyone experiences robots in the same way? Are their experiences to be discounted? The second problem is that Darling’s approach might be thought to derive an ‘ought’ from an ‘is’: the facts of experience determine the content of our moral obligations. The third problem is that it makes robot rights depend on us — our experience of robots — and not on the properties of the robots themselves. I agree with Gunkel that these might be problematic, but again I tend to think that they are problems that plague our approach to humans as well.

I’ve tried to summarise Gunkel’s criticisms of the four different positions in the following diagram.

3. The Other Perspective

Gunkel argues that none of the arguments outlined above is fully persuasive. They each have their problems. We could continue to develop and refine the arguments, but he favours a different approach. He thinks we should try to find a fifth perspective on the problem of robot rights. He calls this perspective ‘thinking otherwise’ and bases it on the work of Emmanuel Levinas. I’ll have to be honest and admit that I don’t fully understand this perspective, but I’ll do my best to explain it and identify where I have problems with it.

In essence, the Levinasian perspective favours an ethics-first view of ontology. The four perspectives outlined above all situate themselves within the classic Humean is-ought distinction. They claim that the rights of robots are, in some way, contingent upon what robots are, i.e. that our ethical principles determine what is ontologically important and that, correspondingly, the robot’s ontological properties will determine its ethical status. The Levinasian perspective involves a shift away from that way of thinking, and in particular away from the assumption that you can only derive obligations from facts. The idea is that we first focus on our ethical responses to the world and then consider the ontological status of that world. It’s easier to quote directly from Gunkel on this point:

According to this way of thinking, we are first confronted with a mess of anonymous others who intrude on us and to whom we are obligated to respond even before we know anything at all about them. To use Hume’s terminology — which will be a kind of translation insofar as Hume’s philosophical vocabulary, and not just his language, is something that is foreign to Levinas’s own formulations — we are first obligated to respond and then, after having made a response, what or who we responded to is able to be determined and identified.

(Gunkel 2017, 10)

I have some initial concerns about this. First, I’m not sure how distinctive or radical this is. It seems broadly similar to an approach that Dan Dennett has advocated for years in relation to the free will debate. His view is that it may be impossible to settle the ontological question of freedom vs determinism and hence we should allow our ethical practices to guide us. Setting that aside, I also have some concerns about the meaning of the phrase ‘obligated to respond’ in the quoted passage. It seems to me that it could be trading on an ambiguity between two different meanings of the phrase, one amoral and one moral. It could be that we are physically obligated to respond: our ongoing engagement with the world doesn’t give us time to settle moral or ontological questions first before coming up with a response. We are pressured to come up with a response and revise and resubmit our answers to the ontological/ethical questions at a later time. That type of obligated response is amoral. If that’s what is meant by the phrase ‘obligated to respond’ in the above passage then I would say it is a relatively banal and mundane idea. The moralised formulation of the phrase would be very different. It would suggest that our obligated response actually has some moral or ethical weight. That’s more interesting — and it might be true in some deep philosophical sense insofar as we can never truly escape or step back from our dynamic engagement with the world — but then I’m not sure that it necessitates a radical break from traditional approaches to moral philosophy.

This brings me to another problem. As described, the Levinasian perspective seems very similar to the one advocated by Kate Darling. After all, she was suggesting that our ethical stance toward social robots should be dictated by our phenomenological experience of them. The Levinasian perspective says pretty much the same thing:

[T]he question of social and moral status does not necessarily depend on what the other is in its essence but on how she/he/it…supervenes before us and how we decide, in the “face of the other” (to use Levinasian terminology), to respond.

(Gunkel 2017, 10)

Gunkel anticipates this critique. He argues that there are two major differences between the Levinasian perspective and Darling’s. The first is that Darling’s perspective is anthropomorphic whereas the Levinasian one is resolutely not. For Darling, our ethical response to social robots is dictated by our emotional needs and by our tendency to project ourselves onto the ‘other’. Levinas thinks that anthropomorphism of this kind is a problem because it denies the ‘alterity’ of the other. This then leads to the second major difference which is that Darling’s perspective maintains the superiority and privilege of the self (the person experiencing the world) and maintains them in a position of power when it comes to granting rights to others. Again, the purpose of the Levinasian perspective is to challenge this position of superiority and privilege.

This sounds very high-minded and progressive, but it’s at this point that I begin to lose the thread a little. I am just not sure what any of this really means in practical and concrete terms. It seems to me that the self who experiences the world must always, necessarily, assume a position of superiority over the world they experience. They can never fully occupy another person’s perspective — all attempts to sympathise and empathise are ultimately filtered through their own experience.

Furthermore, I do not see how deciding on an entity’s rights and obligations could ever avoid assuming some perspective of power and privilege. Rights — while perhaps grounded in deeper ethical truths — are ultimately social constructions that depend on institutions with powers and privileges for their practical enforcement. You can have more-or-less hierarchical and absolute institutions of power, but you cannot completely avoid them when it comes to the protection and recognition of rights. So, I guess, I’m just not sure where the Levinasian perspective ultimately gets us in the robot rights debate.

That said, I know that David is publishing an entire book on this topic next year. I’m sure more light will be shed at that stage.