Here’s a simple thought, but one that I think is quite profound: one’s happiness in life depends, to a large extent, on how one thinks about and navigates the space of possible lives one could have lived. If you have too broad a conception of the space of possibility, you are likely to be anxious and unable to act, always fearing that you are missing out on something better. If you have too narrow a conception of the space of possibility, you are likely to be miserable (particularly if you get trapped in a bad set of branches in the space of possibility) and unable to live life to the full. But it’s not that simple either. Sometimes you have to focus on the negative and sometimes you have to narrow your mindset.

I say this is a profound but simple thought. Why so? Well, it strikes me as profound because it captures something that is fundamentally true about the human condition, something that is integral to a number of philosophical discussions of well-being. It strikes me as simple because I think it’s something that is relatively obvious and presumably must have occurred to many people over the course of human history. And yet, for some reason, I don’t find many people talking about it.

Don’t get me wrong. Plenty of people talk about possible worlds in philosophy and science, and many specific discussions of human life touch upon the idea outlined in the opening paragraph. For example, discussions of human emotions such as regret, or the rationality of decision-making, or the philosophical significance of death, often touch upon the importance of thinking in terms of possible lives. What frustrates me about these discussions is that they don’t do so in an explicit or integrated way.

This article is my attempt to make up for this perceived deficiency. I want to justify my opening claim that one’s happiness in life depends on how one thinks about and navigates the space of possible lives; and I want to further support my assertion that this is a simple and profound idea. I start by clarifying exactly what I am talking about.

1. The Basic Picture: The Space of Possible Lives

The actual world is the world in which we currently live. It can be defined in terms of a list of propositions that exhaustively describes the features of this world. A possible world is a world that could exist. It can be defined as any logically consistent set of propositions describing a world. The space of logically possible worlds is vast. Logical consistency is only a minor constraint on what is possible. Virtually anything goes if this is your only limitation on what is possible. For example, there is a logically possible world in which the only things that exist are an apple and a cat, inside a large box.

This possible world isn’t very likely, of course, and this raises an important point. Possible worlds can be ordered in terms of their accessibility to us. It is easiest to define this in terms of the “distance” between a possible world and the actual world in which we live. Worlds that are ‘close’ to our own world (in the sense that they differ minimally) can be presumed to be relatively accessible to us (though see the discussion below of determinism and free will); contrariwise, worlds that are ‘far away’ (in the sense that they have many differences from our own world) are relatively inaccessible. Some possible worlds will require a technological breakthrough to make them accessible to us (e.g. a world in which interstellar travel is possible for creatures like us); others may never be accessible to us because they breach the fundamental physical laws of our reality (e.g. a world in which universal entropy is reversed). Philosophers often distinguish between these different shades of possibility by using phrases like “physical possibility”, “technical possibility” and so on. Probability is also an important part of the discussion as it gives us a way of quantitatively ranking the accessibility of a possible world.

The idea of a “possible life” can be defined in terms of a possible world. Your actual life is the life you are currently living in the actual world. A “possible life” is simply a different life that you could be living in another possible world. One way of thinking about this is to simply imagine a different possible world where the only differences between it and the actual world relate specifically to your life. Possible lives exist in the past and in the future. There are possible lives that I could have lived and possible lives that I might yet live. For example, there is a possible life where I studied medicine at university rather than law. If I had followed that path, my present life could be very different. Likewise, there is a possible life where I run for political office in the future. If I follow that path, my life will end up being very different from what I currently envisage.

Possible lives can be arranged and ranked in a number of different ways. Obviously, they can be ranked in terms of their accessibility to us (as per the previous discussion of possible worlds), or they can be ranked in terms of their normative value to us. Some possible lives are better than others. A possible life in which I murder someone and get sent to jail for life is presumably going to be worse (for me and for others) than a life in which I work hard and discover a cure for some serious disease.

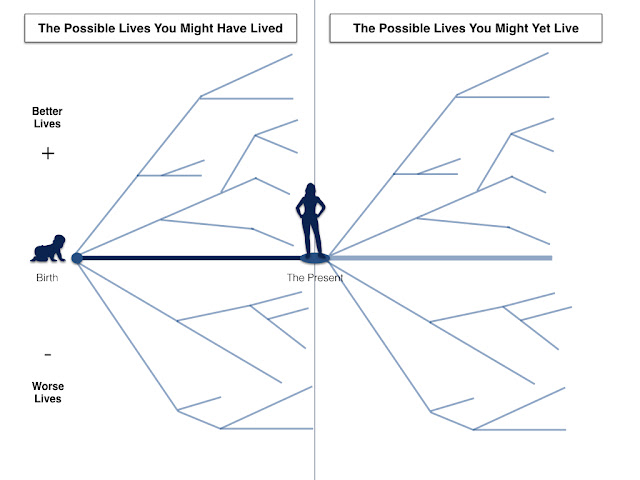

Pictures are worth a thousand words so consider the image below. It illustrates what I would take to be the fundamental predicament of life. In the centre of the image is a person. Let’s suppose this person is you. The thick bold line represents your actual life (i.e. the life, out of all the possible lives you could have lived, that you are actually living). To the left of your present location is your past and arranged along each side of the thick bold line are the possible lives you could have lived before the present moment. To the right of your present location is your future and arranged along each side of the centre line are the possible lives you might yet live. The possible lives that lie above the line represent lives that are better than your current, actual, life; the possible lives that lie below the line represent lives that are worse than your current life. The accessibility of lives can also be represented in this image. We can assume that the further a life lies from the centre line, the less accessible it is (though in saying this it is important to realise that accessibility does not correlate with betterness or worseness, which is an impression you might get from the way in which I have illustrated it).

|

The Human Predicament: The Space of Possible Lives |

The essence of my position is that how we think about our predicament, nested as we are in a latticework of possible lives, will to a large extent determine how happy and successful we are in our actual life. In particular, knowing when to broaden and when to narrow our conception of the set of possible lives we could have lived, and might yet live, is key to happiness.

2. The Elephant in the Room: Determinism

Before I go any further, I need to address the elephant in the room: determinism. Determinism is a philosophical thesis that holds that every event that occurs in this actual world has a sufficient cause of its existence in the prior events in this world. The life you are living today is the product of all the events that occurred prior to the present moment. Given those events, there is no other way the present moment could have turned out. It simply had to be this way.

There is another way of putting this. According to one well-known philosophical definition of determinism (first formulated, I believe, by Peter van Inwagen), determinism is the view that there is only one possible future. Given the full set of past events (E1…En), there is only one possible next event (En+1), because those prior events fully determine the nature and character of En+1.

If determinism is true, it would seem to put paid to the argument I’m trying to put forward in this article. After all, if determinism is true, it would seem to follow that all talk about the possible lives we could have lived, and might yet live, is fantastical poppycock. There is only one life we could ever live and we may as well get used to it.

But I don’t quite see it that way. In this regard, it’s worth remembering that determinism is a metaphysical thesis, not a scientific one. No amount of scientific evidence of deterministic causation can fully confirm the truth of determinism. And, what’s more, there are some prominent scientific theories that seem to be open to some degree of indeterminism (e.g. quantum theory) or, if not that, are at least open to “possible worlds”-thinking. It is worth noting, for example, that some highly deterministic theories in cosmology and quantum mechanics only preserve their determinism if they allow for the possibility of multiple universes and many worlds. The most famous example of this might be the “many worlds” interpretation of quantum mechanics, first set out by Hugh Everett. This interpretation retains the determinism of the quantum mechanical Schrödinger equation but only does so by holding that there are many different worlds in existence. These worlds may or may not be accessible to us, but it is not illegitimate to talk about them.

Admittedly, these esoteric aspects of cosmology and quantum theory don’t offer much succour to the kind of position I’m defending here. But that brings me to a more important point. Even if determinism is true (and there is, literally, only one possible future) it does not follow that thinking about one’s life in terms of the possible lives one could have lived and might yet live is illegitimate. If the world is deterministic it is still likely to be causally complex. This means that, even if determinism is true, there will often be no easy way for us to say what caused what and what follows from this.

An analogy might help to underscore this. When I was a student, one of the favoured topics in history class was “The Causes of World War I”. I learned from these classes that there are many putative causes of World War I. It’s hard to say which “cause” was critical, if any. Perhaps World War I was caused by German aggression, or perhaps, as Christopher Clark argues in his book The Sleepwalkers, it was a complex concatenation of events, no one of which was sufficient in its own right. It’s really hard to say. For all we know, in the absence of German aggression, things might have gone very differently. Or maybe they wouldn’t. Maybe we would have stumbled into a great war anyway. Historians and fiction writers love to speculate, and it’s often useful to do so: we gain insight into the past by imagining the counterfactuals, and gain wisdom for the future by thinking through the different possible worlds.

What is true for historians and fiction writers is also true for ourselves when we look at our own lives. Our own lives are causally complex. For any one event that occurred in our past (or that may yet occur in our future) there is probably a whole panoply of events that may or may not be critical to its occurrence. As a result, for all we know, there may have been other lives we could have lived and may yet live. To put this more philosophically, even if it is true that we live in a metaphysically deterministic world in which there is only one possible future, to all intents and purposes we still live in an epistemically indeterministic world in which multiple possible futures seem to still be accessible to us.

In this respect, it is important to bear in mind the distinction between fatalism and determinism. The fact that the world is deterministic does not imply that we play no part in shaping its future. We still make a difference, and in order to make sense of the difference we might make, we need to entertain “possible worlds”-thinking.

All of this leads me to conclude that determinism does not scupper the argument I am trying to make.

3. Looking Back: Regret, Guilt and Gratitude

If we accept that it is legitimate to think in terms of possible lives, then we open ourselves up to the idea that thinking wisely about the space of possibility is key to happiness and success. To illustrate, we can start by looking back, i.e. by considering the life we are living in the present moment relative to the other lives we might have lived before the present moment.

If, when we do this, we focus predominantly on possible lives that would have been better than the life we are currently living (along whatever metric of “betterness” we prefer), we are likely to be pretty miserable. We will tend to be struck by the sense that our actual life does not measure up. There are better lives we could have been living. Two emotions/attitudes are commonly associated with this style of thinking. The first is regret. This is both a negative feeling about your present life and a judgment that it is inferior to other possibilities. Regret is usually tied to specific past decisions. We regret making those decisions and judge that we could have done better. Sometimes, regret is more general and vague. There is no specific decision that we regret, but we are filled with the general sense that things are not as good as they could be. The second is guilt. When the choices we make end up harming others, regret can turn into guilt. We can become wracked by the sense that not only are our lives worse than they might have been, but we have failed in our moral duties too.

As I noted on a previous occasion, I find my own thoughts about the past to be preoccupied by feelings of regret and guilt. I regret not making certain decisions earlier in life (e.g. getting married, having children) or not seizing certain opportunities (e.g. better jobs and so on). This regret can sometimes be overwhelming, even though I acknowledge that it is often irrational. Given the aforementioned causal complexity of the real world, there is no guarantee that if I had done things differently they would have turned out for the better. Thinking about regret in these philosophical terms sometimes helps me to escape the trap of negative thinking.

If, when we look to the past, we focus predominantly on possible lives that would have been worse than the life we are currently living, we are likely to be pretty happy. I say this with some trepidation. It’s possible that some people have a very low hedonic baseline and so no amount of positive thinking about the past will make them happy, but as a general rule of thumb it seems to follow that happiness flows from focusing on the negative space of possibility in the past. If things could have been much worse than they currently are, then we are likely to think that our present lives are not all that bad. This is, in fact, a classic Stoic tactic for ensuring more contentment in life: always imagine how things might have been worse.

Two emotions/attitudes are commonly associated with this style of thinking. The first is a sense of achievement. This is a self-directed emotion and judgment that arises from the belief that you have made your life better than it might otherwise have been. You have navigated some stormy waters and charted a course through the space of possibility that avoided bad outcomes (failure, hardship, etc.). The second is gratitude. This is an other-directed (or outward-directed) emotion and judgment that arises from the belief that, although you may not have controlled it, your life has turned out better than it might have done. This could be because other people helped you out, or it could be through sheer luck and accident of birth (though some people might like to distinguish the feeling of luck from that of gratitude).

Given these reflections on looking back, you might think there is an easy way to make yourself happy: focus on how your present life is better than many of the possible lives you could have lived, and don’t focus on how it is worse than others. But that’s easier said than done. Sometimes you can get trapped in spirals of negative thinking where you always think about how things could have been better. Furthermore, focusing entirely on how things might have been worse could well be counterproductive. As I noted in an earlier article, not all regret is bad. You can learn a lot about yourself from your regrets. You can learn about your desires and personal values. This is crucial when we start to look forward.

4. Looking Forward: Optimism, Pessimism and Death

Although looking back is a useful practice, and although it is often an important source of self-knowledge, ultimately looking forward is more important. This is because we live our lives in the forward-looking direction. Life is a one-way journey to the future. Until we invent a technology that enables us to actually go back in time, we have to resign ourselves to the fact that our main opportunity for exploring possible lives lies in the future.

When looking forward, one question predominates: which of the many possible futures that we could access will we actually end up accessing?

If, when we ask this question, we focus primarily on possible futures that are better than our present lives, we are likely to be quite optimistic. Indeed, focusing on better possible futures, and the things you can do to make them more accessible, might be one of the keys to happiness. On a previous occasion, I looked at Lisa Bortolotti’s “agency” theory of optimism. In defending this theory, Bortolotti noted that many forms of optimism are irrational: assuming the future is going to be better than the past is often epistemically unwarranted. Nevertheless, assuming that you have some control over the future (even if this is epistemically unwarranted from an objective perspective) does seem to correlate with an increased chance of success. Bortolotti cited some famous studies on cancer patients in support of this view. In those studies, the cancer patients who believed they could influence their prospects of recovery, through, for example, dietary changes or exercise or other personal health regimes, generally did better than those with a more fatalistic attitude.

If, on the other hand, we focus primarily on futures that are worse than our present predicament, we are likely to be quite pessimistic. If we think that we are on the brink of some major personal or societal failure, and that there is nothing we can do to avert this outcome, then we will have little to look forward to. But we have to be cautious in saying this. Blindly ignoring negative futures is a bad idea. There is an old adage to the effect that you have to “plan for the worst and hope for the best”. There must be some truth to that. You need to be aware of the risks you might be running. You need to develop strategies to avoid them. Indeed, this willingness to think about and anticipate negative futures is key to the agency theory of optimism outlined by Bortolotti. The more successful cancer patients are not the ones who bury their heads in the sand about their condition and blithely think everything will turn out for the best. They are often very aware of the dangers. They just assume that there is something they can do to avoid the negative possibilities.

There is another point here that I think is key when looking forward. How narrowly or broadly we frame the set of possible futures can have a significant impact on our happiness. A narrow framing arises when we think that there are only one or two possible futures accessible to us; a broader framing arises when we think in terms of larger numbers of possibilities. Generally speaking, narrowly framing the future set of possibilities is a bad thing. It encourages you to think in terms of false dichotomies or tradeoffs (either X happens and everything goes badly or Y happens and everything goes well). If you ever find yourself trapped in a narrow framing, it is usually a good idea to take a step back and try to broaden your framing. For example, when thinking about how you might “balance” career ambitions with home and family life, you might have a tendency to narrowly frame the future in terms of an either/or choice: either I have a happy family life or a fulfilling career. But usually choices are more complex than that. There are more possibilities and options to explore. Some of those possible futures might allow for a more harmonious balancing of the two goals.

This is not to say that compromises and tradeoffs are always avoidable. They are not. But it is better to reach that conclusion after a full exploration of the set of possible futures than after a cursory search, particularly when it comes to major life choices. Or so I have found. That said, I also think it is possible to have too broad a framing of the possible futures. You can easily become overwhelmed by the possibilities and paralysed by the number of options. Sometimes a narrow framing concentrates the mind and motivates action. It’s all about finding the right balance: don’t frame the future too narrowly or too broadly, and try to focus on the positive without ignoring the negative.

Three other points strike me as being apposite when looking forward.

First, I think it is worth reflecting on the role that technology plays in opening up the space of possible futures. I briefly alluded to this earlier on when I pointed out that the development of certain technologies (e.g. interstellar spaceships) might make possible futures accessible to us that we never previously considered. Of course, interstellar spaceships are just a dramatic example of a much more general phenomenon. All manner of technological innovations, from penicillin to international flights to smartphones, do the same thing: they give us access to futures that would otherwise have been impossible. That’s often a good thing (it can get us out of small, negative spaces of possibility), but remember that technology usually opens up possible futures on both the positive and negative side of the ledger. There are more possible better futures and more possible worse futures. Techno-optimists tend to exaggerate the former; techno-pessimists the latter.

Second, it is worth reflecting on the importance of “thinking in bets” when it comes to how we navigate the set of future possibilities. Since we rarely have perfect control over the future, and since there is much that is uncertain about the unfolding of events, we have to play the odds and hedge our bets, rather than fixate on getting things “right”. Those who are more attuned to this style of thinking will tend to do better, at least in the long run. But, again, this is often easier said than done because it requires a more reflective and detached outlook on what happens as a result of any one decision.

Finally, we have to think about death. Death is, for each individual, the end of all possibilities. It has an interesting effect on the space of possible lives. Once you die, the network of possible lives you could have lived or might yet live vanishes. All the branches are pruned away. All that is left is one solid line through the space of possibility. This line represents the actual life you lived. What trajectory does that line take through the space of possibility? Does it veer upwards or downwards (relative to the dimension of betterness or worseness)? Does it end on a high or low? Although I am somewhat sceptical of our capacity to control the total narrative of our lives, I do think it is worth thinking, occasionally, about the overall shape we would like our lives to have. Maintaining a gently sloping upward trajectory seems like more of a recipe for happiness than riding a roller-coaster of emotional highs and lows.

5. Conclusion

So where does that leave us? I hope I have said enough to convince you that thinking in terms of possible lives is central to the well-lived life. I also hope I have said enough to convince you that there is no simple algorithm you can apply to this task. You might suppose that you can thrive by not dwelling on how things might have been better in the past, and by thinking more about how they might be better in the future (and, in particular, about how you might make them better). I am sure that this simple heuristic works in some cases. But things are not that straightforward. You have to learn from past mistakes and embrace some feelings of regret. You have to choose the wisest framing of the future possibility space to make the best choices. There is no one-size-fits-all approach that will guarantee success and happiness.

You might still argue that all of this is trivial and unhelpful. Maybe that is so, but I still maintain my opening position that there is something profound about the idea. Thinking in terms of possible lives integrates and unites many different fields of philosophical inquiry. It brings together concerns about probability and risk, technology and futurism, the philosophy of the emotions, and the tension between optimism and pessimism. It allows us to reconceive all these debates and approach them from a single, unifying perspective. That seems pretty insightful to me.