Regular readers will know that I have recently been working my way through Erik Wielenberg’s fascinating new book Robust Ethics. In the book, Wielenberg defends a robust non-natural, non-theistic, moral realism. According to this view, moral facts exist as part of the basic metaphysical furniture of the universe. They are sui generis, not grounded in or constituted by other types of fact.

Although it is possible for a religious believer to embrace this view, many do not. One of the leading theistic theories holds that certain types of moral fact — specifically obligations — cannot exist without divine commands (Divine Command Theory or DCT). This is the view defended by the likes of Robert Adams, Stephen Evans, William Lane Craig, Glenn Peoples and many many more.

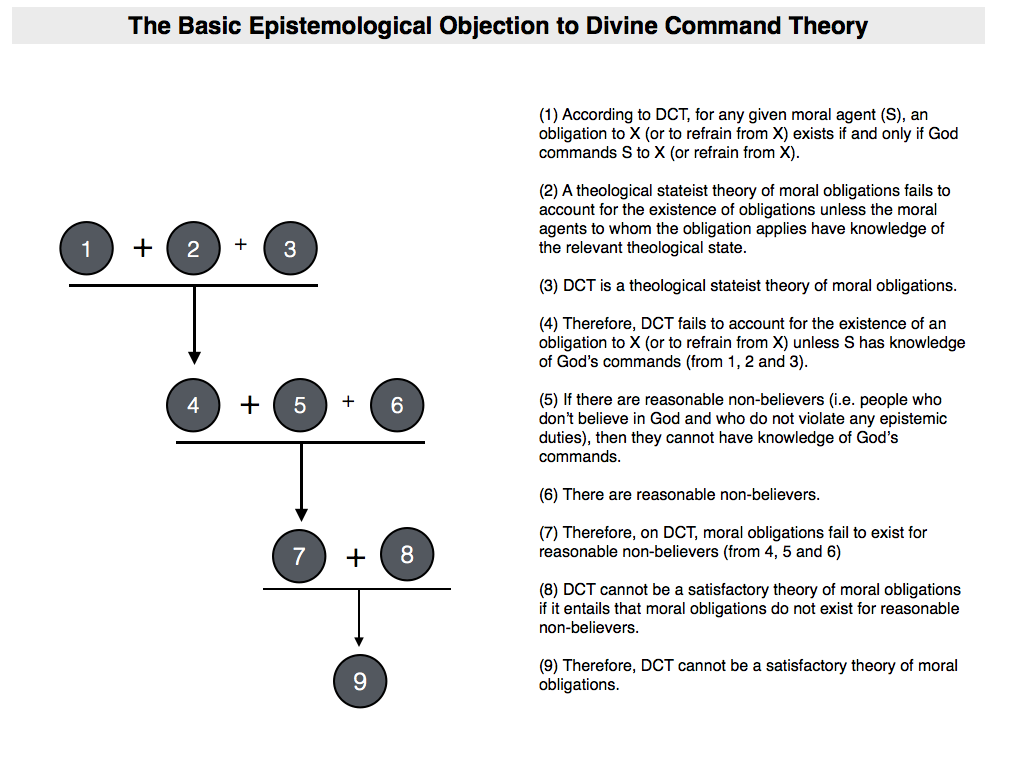

In this post, I want to share one of Wielenberg’s objections to the DCT of moral obligations. This objection holds that DCT cannot provide a satisfactory account of obligations because it cannot account for the obligations of reasonable non-believers. This objection has been defended by others over the years, but Wielenberg’s discussion is the most up-to-date.

That said, I don’t think it is the most perspicuous discussion. So in what follows I’m going to try to clarify the argument in my usual fashion. In other words, you can expect lots of definitions, numbered premises and argument maps. This is going to be a long one.

1. Background: General Problems with Theological Stateism

Theological voluntarism is the name given to a general family of theistic moral theories. Each of these theories holds that a particular moral status (e.g. whether X is good/bad or whether X is permissible/obligatory) depends on one or more of God’s voluntary acts. The divine command theory belongs to this family. In its most popular contemporary form, it holds that the moral status “X is obligatory” depends on the existence of a divine command to do X (or refrain from doing X).

In his book, Wielenberg identifies a broader class of theistic moral theories, which he refers to under the label ‘theological stateism’:

Theological Stateism: The view that particular moral statuses (such as good, bad, right, wrong, permissible, obligatory etc) depend for their existence on one or more of God’s states (e.g. His beliefs, desires, intentions, commands etc).

Theological stateism is broader than voluntarism because the states appealed to may or may not be under voluntary control. For instance, it may be that God necessarily desires or intends that the torturing of innocent children be forbidden. It is not something that he voluntarily wills to be the case. Indeed, the involuntariness of the divine state is something that many theists find congenial because it helps them to avoid the horns of the Euthyphro dilemma (though it may lead to other theological problems). In any event, all voluntarist theories are subsumed within the class of theological stateism.

The foremost defender of the DCT is Robert M. Adams. As mentioned above, he and other DCT believers think that commands are necessary if moral obligations are to exist. The command must take the form of some sign that is communicated to a moral agent, expressing the view that X is obligatory.

Adams offers several interesting arguments in favour of this view. One of the main ones is that without commands we cannot tell the difference between an obligatory act (one that it is our duty to perform) and a supererogatory act (one that is above and beyond the call of duty). Here’s an analogy I have used to explain the gist of this argument:

Suppose you and I draw up a contract stating that you must supply me with a television in return for a sum of money. By signing our names to this contract we create certain obligations: I must supply the money; you must supply the TV. Now suppose that I would really like it if you delivered the TV to my house, rather than forcing me to pick it up. However, it was never stipulated in the contract that you must deliver it to my door. As it happens, you actually do deliver it to my door. What is the moral status of this? The argument here would be that it is supererogatory (above and beyond the call of duty), not obligatory. Without the express statement within the contract, the obligation does not exist.

Adams’s view is that what is true for you and me in the contract is also true when it comes to our relationship with God. He cannot create obligations unless he communicates the specific content of those obligations to us in the form of a command. This is why Adams critiques other stateist theories such as divine desire theory. He does so on the grounds that they allow for the existence of obligations that have not been clearly communicated to the obligated. He thinks this is not a sound basis for the existence of an obligation.

2. Reasonable Non-Believers and the Epistemological Objection

The fact that communication is essential to Adams’s DCT creates a problem. If there are no communications, or if the communications are unrecognisable (for at least some segment of the population) then moral obligations do not exist (for at least some segment of the population). The claim made by several authors is that this is true for reasonable non-believers, i.e. those who do not believe in God but who do not violate any epistemic duty in their non-belief.

This has sometimes been referred to as the epistemological problem for DCT, but that can be misleading. The problem isn’t simply that reasonable non-believers cannot know their moral obligations. The problem is that, for them, moral obligations simply don’t exist. Though this objection is at the heart of Wielenberg’s discussion, and though it has been discussed by others in the past, I have nowhere seen it formulated in a way that explains clearly how it works or why it is a problem for DCT. To correct for that defect, I offer the following, somewhat long-winded, formalisation:

- (1) According to DCT, for any given moral agent (S), an obligation to X (or to refrain from X) exists if and only if God commands S to X (or refrain from X).

- (2) A theological stateist theory of moral obligations fails to account for the existence of obligations unless the moral agents to whom the obligation applies have knowledge of the relevant theological state.

- (3) DCT is a theological stateist theory of moral obligations.

- (4) Therefore, DCT fails to account for the existence of an obligation to X (or to refrain from X) unless S has knowledge of God’s commands (from 1, 2 and 3)

- (5) If there are reasonable non-believers (i.e. people who don’t believe in God and who do not violate any epistemic duties), then they cannot have knowledge of God’s commands.

- (6) There are reasonable non-believers.

- (7) Therefore, on DCT, moral obligations fail to exist for reasonable non-believers (from 4, 5 and 6)

- (8) DCT cannot be a satisfactory theory of moral obligations if it entails that moral obligations do not exist for reasonable non-believers.

- (9) Therefore, DCT cannot be a satisfactory theory of moral obligations.

A word or two on each of the premises. Premise (1) is simply intended to capture the central thesis of DCT. I don’t think a defender of DCT would object. Premise (2) is based on Adams’s objections to other stateist theories (and, indeed, his more general defence of DCT). As pointed out above, he thinks awareness of the contents of the command is essential if we are to distinguish obligations from other possible moral statuses, and to avoid the unwelcome possibility of people being obliged to do X without being aware of the obligation. Premise (3) follows from the definition of stateist theories, and (4) then follows as an initial conclusion.

That brings us to premise (5), which is the most controversial of the bunch and the one that defenders of the DCT have been most inclined to dispute. We will return to it below. Premise (6) is also controversial. Many religious believers assume that non-believers have unjustifiably rejected God. This is something that has been thrashed out at length in the debate over Schellenberg’s divine hiddenness argument (which also relies on the supposition of reasonable non-belief). I’m not going to get into the debate here. I simply ask that the premise be accepted for the sake of argument.

The combination of (4), (5) and (6) gives us the main conclusion of the argument, which is that DCT entails the non-existence of moral obligations for reasonable non-believers. I’ve tacked a little bit extra on (in the form of (8) and (9)) in order to show why this is such a big problem. I don’t have any real argument for this extra bit. It just seems right to say that if moral obligations exist at all, then they exist for everybody, not just theists. In any event, and as we are about to see, theists have been keen to defend this view, so they must see something in it.

That’s a first pass at the argument. Now let’s consider the views of three authors on the plausibility of premise (5): Wes Morriston, Stephen Evans and Erik Wielenberg.

3. Morriston on Why Reasonable Non-believers Cannot Know God’s Commands

We’ll start with Morriston who has, perhaps, offered the most sustained analysis of the argument. He tries to defend premise (5). To understand his defence, we need to step back for a moment and consider what it means for God to command someone to perform or refrain from performing some act. The obvious way would be for God to literally issue a verbal or written command, i.e. to state directly to us that we should do X or refrain from doing X. He could do this through some authoritative religious text or other unambiguous form of communication (just as I am unambiguously communicating with you right now). The problem is that it is not at all clear that we have such direct verbal or written commands. At the very least, this is something that reasonable non-believers reasonably deny.

As a result of this, most DCT defenders argue that we must take a broader view of what counts as a communication. According to this broader view, the urgings of conscience, or deep intuitive beliefs that doing X would be wrong, could count as communications of divine commands. It may be more difficult for the reasonable non-believer to deny that they have epistemic access to those communications.

Morriston thinks that there is a problem here. His view can be summed up by the following argument:

- (10) To know that a sign (e.g. an urging of conscience) is an obligation-conferring command, one must know that the sign emanates from the right source (an agent with the ability to issue such a command).

- (11) A reasonable non-believer does not know that a sign (e.g. an urging of conscience) emanates from the right source.

- (12) Therefore, a reasonable non-believer cannot know whether a sign (e.g. an urging of conscience) is an obligation-conferring command (and therefore (5) is true).

Premise (10) is key here. Morriston derives support for it from Adams’s own DCT. According to Adams, God’s commands have obligation-conferring potential because God is the right sort of being. He has the right nature (lovingkindness and maximal goodness), he has the requisite authority, and we stand in the right kind of relationship to him (he is our creator, he loves us, we prize his friendship and love). It is only in virtue of those qualities that he can confer obligations upon us through his commands. Hence, Morriston is right to say that knowledge of the source is essential if the sign is to have obligation-conferring potential.

Morriston uses a thought experiment to support his point:

Imagine that you have received a note saying, “Let me borrow your car. Leave it unlocked with the key in the ignition, and I will pick it up soon.” If you know that the note is from your spouse, or that it is from a friend to whom you owe a favour, you may perhaps have an obligation to obey this instruction. But if the note is unsigned, the handwriting is unfamiliar, and you have no idea who the author might be, then it’s as clear as day that you have no such obligation.

(Morriston, 2009, 5-6)

And, of course, the problem for the reasonable non-believer is that he/she does not know where the allegedly obligation-conferring signs are coming from. They might think that our moral intuitions arise from our evolutionary origins, not from the diktats of a divine creator.

The upshot of this is that premise (5) looks to be pretty solid.

4. Evans’s Response to Morriston

C. Stephen Evans tries to respond to Morriston. He does so with a lengthy thought experiment:

Suppose I am hiking in a remote region on the border between Iraq and Iran. I become lost and I am not sure exactly what country I am in. I suddenly see a sign, which (translated) reads as follows: “You must not leave this path.” As I walk further, I see loudspeakers, and from them I hear further instructions: “Leaving the path is strictly forbidden”. In such a situation it would be reasonable for me to form a belief that I have an obligation to stay on the path, even if I do not know the source of the commands. For all I know the commands may come from the government of Iraq or the government of Iran, or perhaps from some regional arm of government, or even from a private landowner whose property I am on. In such a situation I might reasonably believe that the commands communicated to me create obligations for me, even if I do not know for sure who gave the commands.

(Evans 2013, pp. 113-114)

Evans goes on to say that something similar could be true in the case of God’s commands. They may be communicated to people in a manner that makes it reasonable for them to believe that they have obligation-conferring potential, even if they don’t know for sure who the source of the command is.

Evans’s thought experiment is probably too elaborate for its own good. I’m not sure why it is necessary to set it on the border between Iraq and Iran, or to stipulate that the sign has to be translated. It’s probably best if we simplify its elements. What Evans really seems to be saying is that in any given scenario, if a sign with the general form of a command is communicated to an agent and if it is a live epistemic possibility for that agent that the sign comes from a source with the authority to create obligations (like the government or a landowner) then it is reasonable for that agent to believe that the sign creates an obligation. To express this in an argumentative form:

- (13) In order for an agent to reasonably believe that a sign is an obligation-conferring command, two conditions must be met: (a) the agent must have epistemic access to the sign itself; and (b) it must be a live epistemic possibility for that agent that the sign emanates from a source with obligation-conferring potential.

- (14) A reasonable non-believer can have epistemic access to signs that communicate commands and it is a live epistemic possibility for such agents that the signs emanate from God.

- (15) Therefore, reasonable non-believers can reasonably believe in the existence of God’s obligation-conferring commands (and therefore (5) is false).

5. Wielenberg’s Criticisms of Evans

It is at this point that Wielenberg steps into the debate. And, somewhat disappointingly, he doesn’t have much to say. He makes two brief objections to Evans’s argument. The first is that Evans assumes (as did Morriston) that the sorts of signs available to reasonable non-believers will be understood by them to have a command-like structure. But it’s not clear that this will be the case.

Morriston and Evans both use thought experiments in which the communication to the moral agent takes the form of a sentence with a command-like structure (e.g. “You must not stray from the path”). This means that the agent knows they are being confronted with a command, even if they don’t know where it comes from. The same would not be true of something like a deep moral intuition or an urging of conscience. A reasonable non-believer might simply view that as a hard-wired or learned response to a particular scenario. Its imperative, command-like structure would be opaque to them.

The second point that Wielenberg makes is that Evans confuses reasonable belief in the existence of an obligation with reasonable belief in the existence of an obligation-conferring command. The distinction is subtle and obscured by the hiker thought experiment. In that thought experiment, the hiker comes to believe in the existence of an obligation to stay on the path because they recognise the possibility that the command-like signs they are hearing or seeing might come from a source with obligation-conferring powers. If you cut out the command-like signs — as Wielenberg says you must — you end up in a very different situation. Suppose that the landowner or government has mind control technology. Every time you walk down the path, you are sprayed with a mist of nanorobots that enter your brain and alter your beliefs in such a way that you think you have an obligation to stay on the path. In that case, there is no command-like communication, just a sudden belief in the existence of an obligation. Following Adams’s earlier arguments, that wouldn’t be enough to actually create an obligation: you would not have received the clear command. That’s more analogous to the situation of the reasonable non-believer.

At least, I think that’s how Wielenberg’s criticism works. Unfortunately, he isn’t too clear about it. Nevertheless, I think we can view it as a rebuttal to premise (14) of Evans’s argument.

- (16) The reasonable non-believer cannot recognise the command-like structure of signs such as the urgings of conscience. At best, for them the urgings of conscience create strong beliefs in the existence of an obligation. Under Adams’s theory, strong belief is not enough for the existence of an obligation. There must be a clear command.

6. Concluding Thoughts

I think the epistemological objection to DCT is an interesting one. And I hope my summary of the debate is useful. Hopefully you can now see why the lack of knowledge of a command poses a problem for the existence of obligations under Adams’s modified DCT. And hopefully you can now see how proponents of the DCT try to rebut this objection.

What do I think about this? I’m not too sure. On the whole, the epistemological objection strikes me as something of a philosophical curio. It’s not the strongest or most rhetorically persuasive rebuttal of DCT. Furthermore, I’m unsure of Wielenberg’s contribution to the debate. I feel like his criticism misses one way in which to interpret Evans’s response. I’ll try to explain.

To me, Evans is making a point about moral/philosophical risk and the effect it has on our belief in the existence of a command, not the contents of that command. I’ve discussed philosophical/moral risk in greater depth before. The main idea in discussions of philosophical/moral risk is that where you have a philosophically contentious proposition (like the possible existence of divine commands) there is usually some significant degree of uncertainty as to whether that proposition is true or false (i.e. there are decent arguments on either side). The claim then is that recognition of this uncertainty can lead to interesting conclusions. For instance, you might have no qualms about killing and eating sentient animals, but if you recognise the risk that this is morally wrong, you might nevertheless be obliged not to kill and eat an animal. The argument for this is that there is a considerable risk asymmetry when it comes to your respective options: eating meat might be perfectly innocuous, but the possibility that it might be highly immoral trumps this possible innocuousness and generates an obligation to not eat meat. Recognition of the risk generates this conclusion.

It might be that Evans’s argument makes similar claims about philosophical risks pertaining to God’s existence and God’s commands. Even if the reasonable non-believer does not believe in the existence of God or in the existence of divine commands, they might nevertheless recognise the philosophical risk (or possibility) that those things exist. And they might recognise it especially when it comes to interpreting the urgings of their own consciences. The result is that they recognise the philosophical risk that a particular sign is an obligation-conferring command, and this recognition is enough to generate the requisite level of knowledge. The fact that they do not really believe that a particular sign has a command-like structure is, contra Wielenberg, irrelevant. What matters is that they recognise the possibility that it has such a structure.

Just to be clear, I don’t think this improves things greatly for the defender of DCT. I think it would be very hard to defend the view that mere recognition of such philosophical risks/possibilities is sufficient to generate obligations for the reasonable non-believer (for one thing, there are far too many potential philosophical risks of this sort). Adams’s arguments seem to imply that a reasonable degree of certainty as to the nature of the command is necessary for any satisfactory theory of obligations. Recognition of mere possibilities seems to fall far short of this.